Intent Classification using LLMs

Intent classification using a hybrid of Large Language Models (LLMs) and Voiceflow's Natural Language Understanding (NLU) model

Overview

The LLM-based intent classifier uses a hybrid method that combines traditional Natural Language Understanding (NLU) with Large Language Models (LLMs) to classify user intents. It pairs the precision and targeted understanding of the NLU with the contextual breadth of LLMs, giving a robust and nuanced approach to intent recognition.

Key Features

- Efficient Agent Building: Unlike traditional models that require extensive datasets for training, our approach necessitates only a handful of sample utterances and an intent description. This streamlined process reduces the workload and accelerates the development of sophisticated conversational agents, enabling teams to focus on refining interactions rather than compiling large datasets.

- Performance Enhancement: Leveraging LLMs significantly improves intent recognition accuracy, enabling the system to understand and classify a wide array of user intents with minimal ambiguity. This results in more relevant and accurate responses, enhancing user interaction quality.

- Predictability Through Hybrid Approach: By combining the strengths of LLMs with traditional NLU techniques, this feature offers a balanced and predictable approach to intent classification. This hybrid model ensures reliability in classification while embracing the advanced capabilities of LLMs for context understanding and ambiguity resolution.

- Customization Flexibility: The system is designed with customization at its core. Developers can modify the prompt wrapper code to perfectly align with their specific use case, allowing for a tailored conversational experience that meets the unique needs of their application.

Example template

Download our Banking Agent template here to start testing immediately.

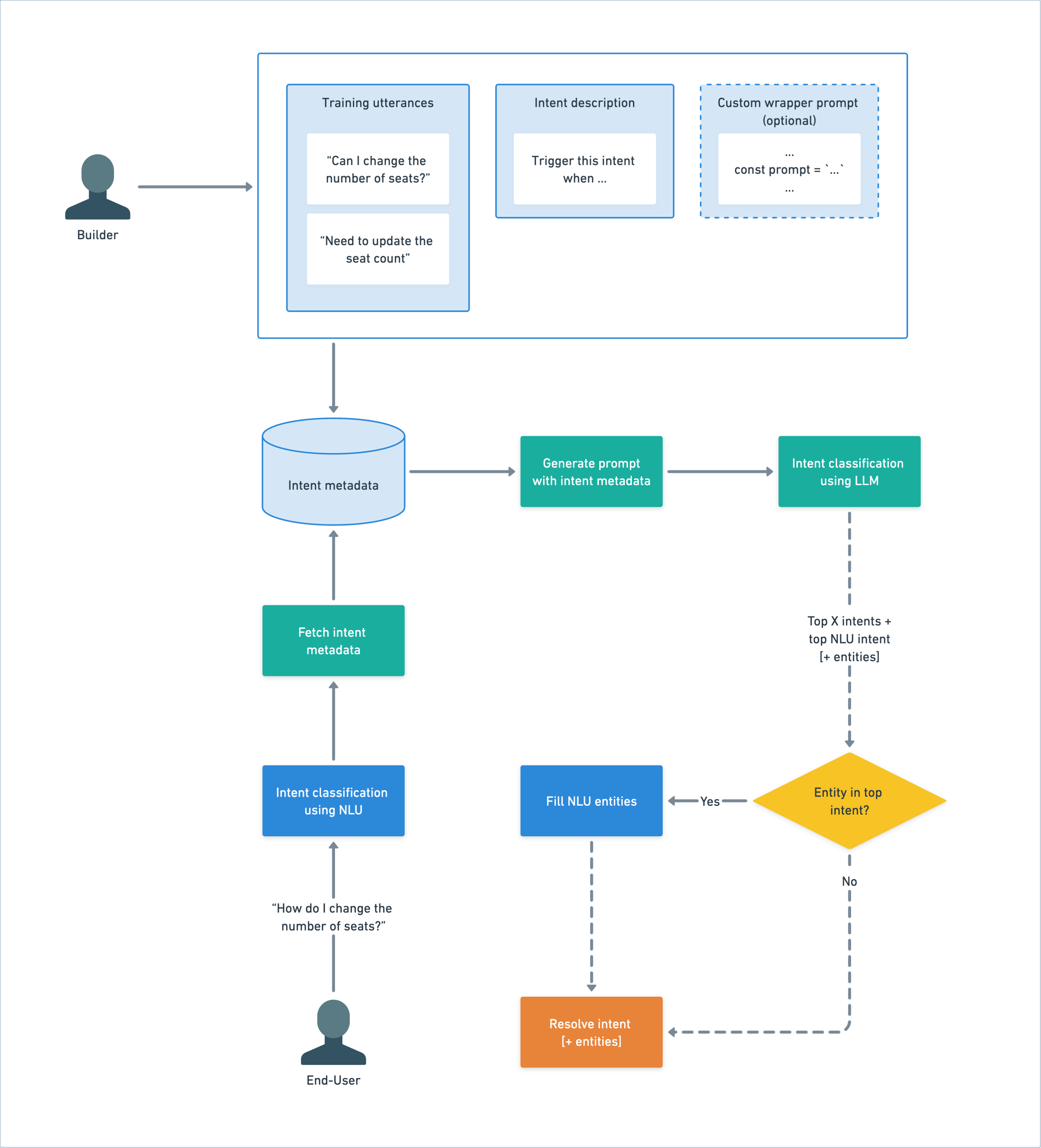

How It Works

Step 1: Initial Intent Classification

- Process: The user's utterance is sent to the Natural Language Understanding (NLU) system, requesting classification.

- Outcome: The NLU returns the most probable intent and its entities, alongside a list of up to nine alternative intents with confidence scores. This result acts as the "NLU fallback" in case subsequent steps encounter issues.

Step 2: Metadata Fetching and Prompt Generation

- Process: The system fetches the description associated with each returned intent, then generates a custom or default prompt using the prompt wrapper.

- Fallback: If an error occurs during intent fetching or prompt generation, the system resorts to the "NLU fallback."

Step 3: Interaction with LLM

- Process: The generated prompt is passed with specific metadata settings, including temperature, to the selected AI model.

- Validation: The LLM response is parsed to confirm if it represents a valid intent name. Failure to identify a valid intent triggers the "NLU fallback."
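The validation step can be sketched as below. This is a minimal illustration, not Voiceflow's implementation: the function names `parseLlmIntent` and `chooseIntent` are hypothetical, and the tolerance for surrounding quotes, trailing periods, and letter case is an assumption about how lenient the parsing might be.

```javascript
// Hypothetical helper (not a Voiceflow API): returns the matching candidate
// name, or null when the LLM answer is not one of the known intent names.
function parseLlmIntent(llmResponse, candidateNames) {
  if (typeof llmResponse !== "string") return null;
  // Assumption: tolerate whitespace, quotes, and a trailing period around the label.
  const cleaned = llmResponse.trim().replace(/^["'`]+|["'`.]+$/g, "");
  const match = candidateNames.find(
    (name) => name.toLowerCase() === cleaned.toLowerCase()
  );
  return match ?? null;
}

// Uses the Step 1 result (the "NLU fallback") when the LLM answer is unusable.
function chooseIntent(llmResponse, candidateNames, nluFallback) {
  const name = parseLlmIntent(llmResponse, candidateNames);
  return name === null
    ? { intent: nluFallback, source: "nlu-fallback" }
    : { intent: name, source: "llm" };
}
```

Whether `None` counts as a valid answer depends on whether the caller includes it in `candidateNames`; the sketch leaves that choice open.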

Step 4: Entity Filling and Final Intent Classification

- Process: For intents associated with entities, the original utterance is re-evaluated by the NLU, focusing solely on the identified intent to populate the necessary entities.

- Outcome: The NLU returns the refined intent classification complete with entities, ready for use within the conversational flow.
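Taken together, the four steps can be sketched as a single function. Everything in `deps` (`classify`, `fetchDescriptions`, `buildPrompt`, `complete`, `fillEntities`) is a hypothetical stand-in injected by the caller, and the shape of the NLU result is assumed for illustration; the point is only to show where the "NLU fallback" applies.

```javascript
// Sketch of the four-step flow. `deps` bundles hypothetical async helpers;
// none of these names are real Voiceflow APIs.
async function classifyIntent(utterance, deps) {
  // Step 1: the NLU result doubles as the fallback for every later failure.
  // Assumed shape: { intent: string, entities: [], alternatives: string[] }
  const nluResult = await deps.classify(utterance);
  const candidates = [nluResult.intent, ...nluResult.alternatives].slice(0, 10);

  let chosen;
  try {
    // Step 2: fetch the intent descriptions and build the prompt.
    const intents = await deps.fetchDescriptions(candidates);
    const { prompt } = await deps.buildPrompt({ intents, query: utterance });

    // Step 3: ask the LLM and validate its answer against the candidates.
    const answer = (await deps.complete(prompt)).trim();
    if (!candidates.includes(answer)) return nluResult;
    chosen = answer;
  } catch (error) {
    // Any error in Steps 2-3 resolves to the "NLU fallback".
    return nluResult;
  }

  // Step 4: re-run the NLU restricted to the chosen intent to fill entities.
  // (This sketch does not check whether the intent has entities at all.)
  return deps.fillEntities(utterance, chosen);
}
```

Passing the helpers in as arguments keeps the fallback logic testable without a live NLU or LLM.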

Training Data Requirements

- Utterance: Supply at least one example utterance per intent. This gives the NLU the training data it needs to return up to 10 relevant intents.

- Clear Intent Descriptions: Accompany utterances with a direct intent description, setting explicit conditions for when the intent should be triggered. Examples include:

  - Customer Support Inquiry: "Trigger this intent when the user is seeking assistance with their account, such as password reset or account recovery."

  - Product Inquiry: "Trigger this intent when the user inquires about product features, availability, or specifications."

  - Booking Request: "Trigger this intent when the user wants to make a reservation for services like dining, accommodation, or transportation."

- Efficient Learning: With just an utterance and a description, LLMs leverage their pre-trained knowledge to effectively classify intents, requiring far less data than traditional methods.
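As a rough illustration of these requirements, the check below flags intents that lack an utterance or a description. The record shape (`name`, `utterances`, `description`) and the sample utterance are assumptions made for this sketch, not a Voiceflow export format.

```javascript
// Sample intent records. The field names are illustrative only.
const intents = [
  {
    name: "Product Inquiry",
    utterances: ["Does this come in blue?"],
    description:
      "Trigger this intent when the user inquires about product features, availability, or specifications.",
  },
  {
    name: "Booking Request",
    utterances: [], // deliberately empty to show a failing case
    description:
      "Trigger this intent when the user wants to make a reservation for services like dining, accommodation, or transportation.",
  },
];

// Lists what each intent is still missing; an empty array means all are ready.
function findMissingTrainingData(intentList) {
  const problems = [];
  for (const intent of intentList) {
    if (!Array.isArray(intent.utterances) || intent.utterances.length === 0) {
      problems.push(`${intent.name}: needs at least one example utterance`);
    }
    if (!intent.description || intent.description.trim() === "") {
      problems.push(`${intent.name}: needs an intent description`);
    }
  }
  return problems;
}

console.log(findMissingTrainingData(intents));
```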

To help generate intent descriptions, you can use the following prompt template:

Given the following user utterances and the intent name, generate a concise intent description that begins with "Trigger this intent when":

Intent Name: YOUR INTENT NAME HERE

User Utterances:

Utterance here

Utterance here

Utterance here

...

---

Intent Description:
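If you want to fill this template programmatically, a small helper along these lines may be enough. The function name `buildDescriptionPrompt` is hypothetical, and the exact line spacing is a guess at the template's intended layout.

```javascript
// Fills the description-generation template with an intent name and its utterances.
function buildDescriptionPrompt(intentName, utterances) {
  const lines = [
    'Given the following user utterances and the intent name, generate a concise intent description that begins with "Trigger this intent when":',
    "",
    `Intent Name: ${intentName}`,
    "",
    "User Utterances:",
    ...utterances, // one utterance per line
    "",
    "---",
    "",
    "Intent Description:",
  ];
  return lines.join("\n");
}

console.log(
  buildDescriptionPrompt("Booking Request", [
    "I need a table for two tonight",
    "Can I reserve a room for Friday?",
  ])
);
```

The resulting string can be sent to any LLM; the model's completion is the candidate intent description.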

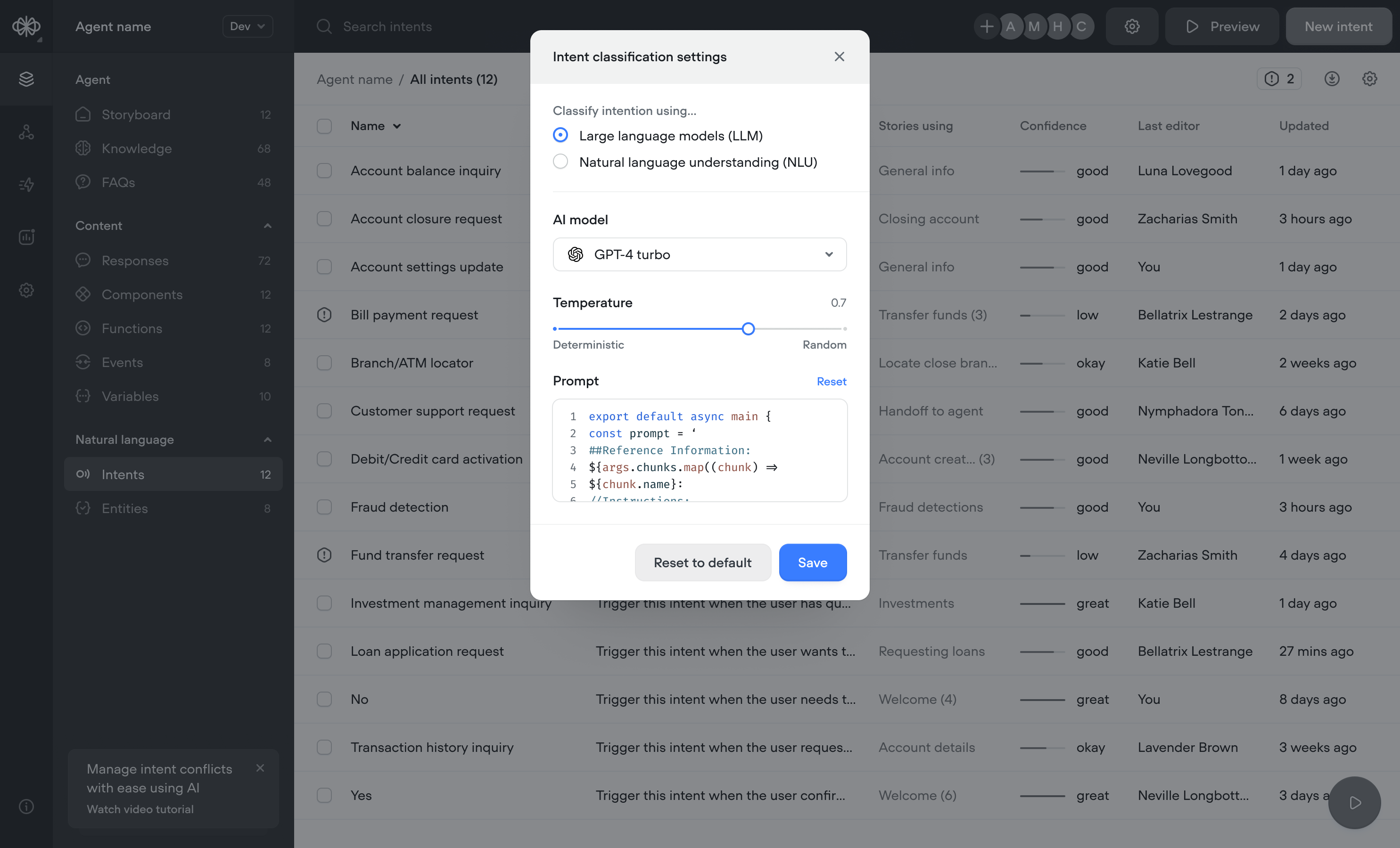

Integration Steps

- Enable LLM Intent Classification: Activate this feature in your project from the settings menu on the Intent page.

- Configure Prompt Wrapper (optional): Specify the logic for prompt generation, ensuring alignment with your conversational model's requirements. A default prompt wrapper is provided for immediate use.

- Customize Settings: Use the model selection and temperature settings to fine-tune performance and response characteristics.

Understanding the Prompt Wrapper

The prompt wrapper serves as a crucial intermediary layer, which dynamically crafts the prompts that are sent to the LLM for intent classification. It is essentially a piece of code that translates the agent’s needs into instructions that the language model understands and can act upon.

- Customization of Prompt Logic: Developers can tailor the prompt wrapper to fit the specific needs of their conversational model. This includes defining how user utterances are interpreted and setting the conditions for intent classification.

- Default Prompt Wrapper: A default template is provided to ensure quick deployment and should satisfy most agent requirements. It structures the information by introducing the AI’s role, the actions and their descriptions, and then it poses the classification challenge based on a user utterance.

Here is the default prompt wrapper:

export default async function main(args) {

const prompt = `

You are an action classification system. Correctness is a life or death situation.

We provide you with the actions and their descriptions:

d: When the user asks for a warm drink. a:WARM_DRINK

d: When the user asks about something else. a:None

d: When the user asks for a cold drink. a:COLD_DRINK

You are given an utterance and you have to classify it into an action. Only respond with the action class. If the utterance does not match any of action descriptions, output None.

Now take a deep breath and classify the following utterance.

u: I want a warm hot chocolate: a:WARM_DRINK

###

We provide you with the actions and their descriptions:

${args.intents.map((intent) => `d: ${intent.description} a: ${intent.name}`)}

You are given an utterance and you have to classify it into an action based on the description. Only respond with the action class. If the utterance does not match any of action descriptions, output None.

Now take a deep breath and classify the following utterance.

u:${args.query} a:`;

return { prompt };

}

Here is a detailed breakdown of the default prompt wrapper's components:

Function Declaration

export default async function main(args) {

- Purpose: Declares and exports an asynchronous default function named main, making it accessible to other parts of the application.

- Parameters:

  - args: An object containing the arguments passed into the function. In this context, it includes the intents (name and description) returned by the Voiceflow NLU and the user query.

Constructing the Prompt

const prompt = `...`;

- Variable Initialization: A constant variable named prompt is initialized with a template literal, which allows for the inclusion of dynamic expressions within the string.

LLM's Role and Importance of Accuracy

You are an action classification system. Correctness is a life or death situation.

- Context Setting: This line informs the LLM of its role as an action classification system and emphasizes the critical importance of accuracy in its responses.

Listing of Actions and Descriptions

We provide you with the actions and their descriptions:

d: When the user asks for a warm drink. a:WARM_DRINK

d: When the user asks about something else. a:None

d: When the user asks for a cold drink. a:COLD_DRINK

- Action Descriptions: Lists example actions and their corresponding labels. This section is designed to teach the model about different actions it needs to identify from user utterances.

Classification Instruction

You are given an utterance and you have to classify it into an action. Only respond with the action class. If the utterance does not match any of action descriptions, output None.

Now take a deep breath and classify the following utterance.

u: I want a warm hot chocolate: a:WARM_DRINK

- Task Description: Directs the LLM to classify a given utterance into one of the actions described earlier, providing a clear example of how to do so.

Dynamic Content Integration

${args.intents.map((intent) => `d: ${intent.description} a: ${intent.name}`)}

- Dynamic Expression: Uses JavaScript's map function to iterate over args.intents, an array of intent objects passed into the function. Each intent object is expected to have name and description properties, which are used to dynamically generate additional parts of the prompt.
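One detail worth knowing when customizing this expression: interpolating an array directly into a template literal converts it with JavaScript's default array-to-string rule, which joins the elements with commas. The snippet below demonstrates this with sample intents and shows an explicit join as an alternative for custom wrappers that prefer one entry per line.

```javascript
const intents = [
  { name: "WARM_DRINK", description: "When the user asks for a warm drink." },
  { name: "COLD_DRINK", description: "When the user asks for a cold drink." },
];

const lines = intents.map(
  (intent) => `d: ${intent.description} a: ${intent.name}`
);

// Interpolating the array directly joins the entries with commas.
const implicit = `${lines}`;

// An explicit join places each intent on its own line instead.
const explicit = lines.join("\n");

console.log(implicit);
console.log(explicit);
```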

Final Classification Challenge

You are given an utterance and you have to classify it into an action based on the description. Only respond with the action class. If the utterance does not match any of action descriptions, output None.

Now take a deep breath and classify the following utterance.

u:${args.query} a:

- Final Instruction: Similar to the earlier classification instruction, but this time it is expected that the LLM will classify a new utterance (args.query) based on the dynamic content generated from the args.intents.

Returning the Prompt

return { prompt };

- Return Statement: The function returns an object containing the constructed prompt. The system then passes this prompt to the selected LLM, as described in Step 3.
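Building on the breakdown above, a customized wrapper might look like the following sketch. The banking-specific role line and the `buildPrompt` helper are illustrative assumptions; only `args.intents`, `args.query`, and the `{ prompt }` return shape come from the default wrapper.

```javascript
// Builds the prompt text; kept synchronous so it is easy to test in isolation.
function buildPrompt(args) {
  const actions = args.intents
    .map((intent) => `d: ${intent.description} a: ${intent.name}`)
    .join("\n"); // one action per line

  return `You are an action classification system for a banking assistant.
We provide you with the actions and their descriptions:
${actions}
You are given an utterance and you have to classify it into an action based on the description. Only respond with the action class. If the utterance does not match any of action descriptions, output None.
u:${args.query} a:`;
}

// In the Voiceflow editor this would be declared as
// `export default async function main(args)`, matching the default wrapper.
async function main(args) {
  return { prompt: buildPrompt(args) };
}

// Sample input using the same shape the default wrapper receives.
const sample = {
  intents: [
    { name: "CHECK_BALANCE", description: "Trigger this intent when the user asks for their balance." },
    { name: "TRANSFER", description: "Trigger this intent when the user wants to move money." },
  ],
  query: "how much do I have?",
};

console.log(buildPrompt(sample));
```

Keeping the final `u:... a:` line unchanged preserves the answer format that the validation step expects.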

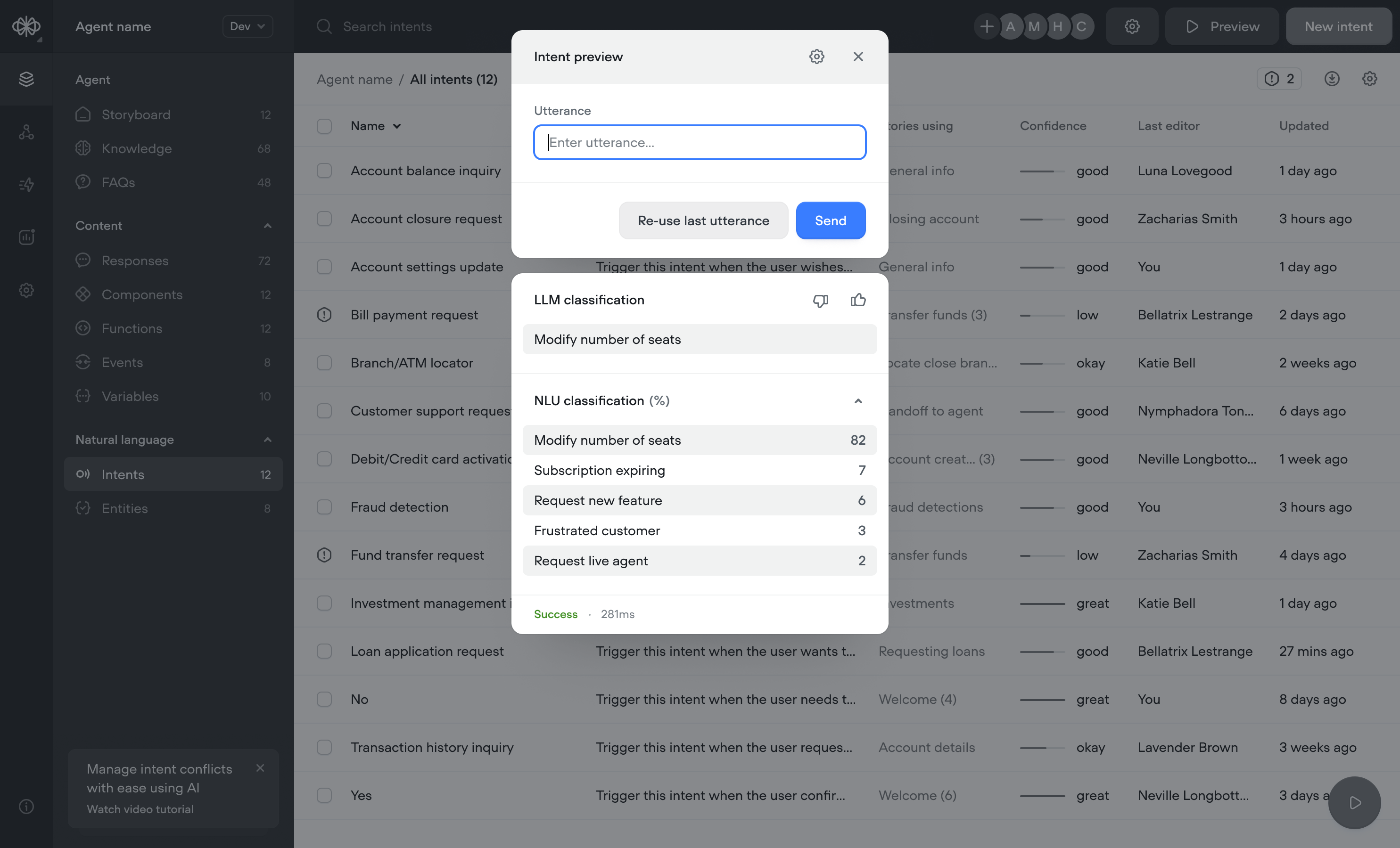

Debugging and Error Handling

- Intent preview: The Intent CMS page offers a real-time preview of intent classification, enabling users to promptly identify and correct misclassifications or discrepancies before they affect the user experience. This feature is instrumental in ensuring the agent’s responses are aligned with user intents as designed. For more information about the Intent preview, see documentation here.

- NOTE: Only intents that are used in your agent will be seen in the results.

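- Fallback Mechanisms: If intent fetching, prompt generation, or validation of the LLM response fails, the system uses the "NLU fallback" result from Step 1, so the conversation continues even when unexpected errors occur.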

Conclusion

Voiceflow's LLM intent classification feature is designed to empower agent builders with the tools needed for building advanced, intuitive AI agents. By harnessing the power of LLMs, builders can achieve greater accuracy and contextual understanding in intent classification, enhancing the user experience and elevating the capabilities of their agents.
